
WEBLOG

October 30th, 2014 (Permalink)

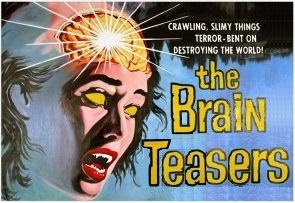

A Hallowe'en Costume Contest Puzzle

The four finalists in the All-Hallows' Eve costume contest were traditional costumes:

- There was a vampire who looked much like Count Dracula;

- A Frankenstein's monster, though more in the Hammer mode than the Karloff;

- A person wrapped in white gauze who was either supposed to be a bloody mummy or the victim of a terrible accident;

- And, finally, a werewolf-woman.

Three friends who had come to see the contest discussed the finalists:

Alice: I don't know who's going to win, but it sure won't be that pathetic Dracula!

Bob: I think either the mummy or the werewolf will win.

Carol: You're both nuts! It's definitely going to be either the vampire or the Frankenstein monster.

In the event, it turned out that only one of the three friends was right. Which costume won the contest?

October 29th, 2014 (Permalink)

Don't nudge, judge!

This is the fourth and, I expect, last entry on Steven Poole's "Not So Foolish" article―see the Source, below―lest I end up writing more words than are in the article itself. For the three previous entries, see the links below. In this entry, I want to finally discuss what Poole has to say about "nudging", which was a large part of my original motivation for focusing on this article.

Several years ago I wrote a "Book Club" series on Richard Thaler and Cass Sunstein's book Nudge―see links to the entries, below. The book was in the news at the time because Sunstein was an adviser to candidate, then President, Obama and later served for a while in the administration. For this reason, it was thought that "nudging" was the coming thing, and that we would all be getting elbowed in the ribs by the government during the Obama administration. However, in the intervening six years I've seen little if any evidence of "nudging" done by the federal government. Obamacare, the centerpiece achievement of the current president, doesn't seem very nudgy: it has an individual mandate, not an individual nudge. While certainly paternalistic, Obamacare is not exactly libertarian, though it's more libertarian than a national health service would be.

Now, Sunstein has a new book titled Why Nudge?, based on a lecture series he gave a couple of years ago. One of my disappointments with the first book was its failure, despite having a penultimate chapter dealing with objections to nudging, to deal with what seemed to me to be some obvious counter-arguments. So, I'm curious whether Sunstein, in the new book, will finally get around to addressing these doubts, or at least acknowledging their existence.

Poole's article pleases me partly because he raises some of the same objections as I had, and does so more forcefully. Unfortunately, it arrives too late to nudge Sunstein into addressing these legitimate objections in his new book, but at least it may give greater publicity to them. Here are the main unanswered objections:

- Sunstein has failed to consider the alternative to nudging of "debiasing", that is, educating people to be less nudgeable by teaching them to be less at the mercy of the cognitive biases that make nudging possible. Is debiasing possible? If it is, then why shouldn't we try to debias people into making better decisions rather than nudging them? Here's Poole raising the same issue:

…[S]ince nudging depends on citizens ordinarily following their automatic biases, its efficacy would be undermined if we could actually overcome our biases on a regular basis. And can we? That remains a matter of debate. Many researchers think that we can improve our chances of employing rational processes in certain situations simply by reminding ourselves of the biases that might be triggered by the present problem.

I'm certainly one of those who thinks that people can to some extent, if not completely, overcome their cognitive biases. The Fallacy Files is based on the notion that "we can improve our chances of employing rational processes in certain situations simply by reminding ourselves" about mistakes in reasoning "that might be triggered by the present problem."

- If the government adopts nudging as a technique for achieving its goals, then it will have an incentive against debiasing. Do we want a government that is so invested in people being easily herded by nudges that it may seek to keep them uneducated? Here's Poole:

Nudging depends on our cognitive biases being reliably exploitable, and [debiasing] would interfere with that. In this sense, nudge politics is at odds with public reason itself: its viability depends precisely on the public not overcoming their biases.

- My final objection, which is not discussed by Poole, is a moral argument against the paternalism involved in nudging: to treat people paternalistically is to treat them as children. We treat children the way we do because they lack the knowledge and abilities they need in order to avoid danger and make rational decisions for themselves. The main purpose of education is to teach children the know-how and factual knowledge that they will need to safely navigate a dangerous world. Once they have acquired that knowledge, we turn them loose in that world and they are free people.

Most laws are aimed at preventing adults from harming others, not from harming themselves, so they are not justified paternalistically. In contrast, using nudges on adults supposedly for their own good seems to be based on the notion that cognitive biases show that people never grow up, and therefore should be treated as children for the rest of their lives. If debiasing is impossible, perhaps this is true, but Sunstein has not argued for that impossibility.

Adults may prefer to learn from their own mistakes rather than have the government treat them as children. No doubt getting poked in the ribs is better than being hit over the head with a truncheon, but that doesn't mean it's not annoying, even if it's supposedly for your own good.

I don't want to suggest that Sunstein has no answers to these objections; rather, I'm asserting that up to now he simply has not addressed them at all. Perhaps the new book will remedy this defect.

I'll give Poole the last word:

…[E]ven if we each acted as irrationally as often as the most pessimistic picture implies, that would be no cause to flatten democratic deliberation into the weighted engineering of consumer choices, as nudge politics seeks to do. On the contrary, public reason is our best hope for survival. Even a reasoned argument to the effect that human rationality is fatally compromised is itself an exercise in rationality. Albeit rather a perverse, and…ultimately self-defeating one.

Source: Steven Poole, "Not So Foolish", Aeon Magazine, 9/22/2014

Previous Entries in this Series:

- Wink-Wink, Nudge-Nudge, 9/29/2014

- The "Linda Problem" Problem, 10/2/2014

- The Great Pumpkin, 10/13/2014

Book Club: Nudge:

- Introduction

- Chapter 1: Biases and Blunders

- Chapter 2: Resisting Temptation

- Chapter 3: Following the Herd

- Chapter 4: When Do We Need a Nudge?

- Chapter 17: Objections

Update (11/5/2014): The New York Review of Books has a review of Sunstein's new book by Jeremy Waldron, together with a letter to the editor from Sunstein in response and Waldron's answer―see the Sources, below. According to the review, Sunstein addresses the final of the three concerns that I mentioned above, but Waldron is unimpressed:

Sunstein does acknowledge that people might feel infantilized by being nudged. He says that “people should not be regarded as children; they should be treated with respect.” But saying that is not enough. We actually have to reconcile nudging with a steadfast commitment to self-respect.

Of course, I haven't read the new book yet, so I don't know how convincing it is. However, taken together with Poole's article, this review shows that the three concerns that I raised in the original book club entries should not be ignored or simply brushed aside. These same misgivings have been raised independently by at least three different readers of Sunstein's writings on nudging. Waldron also draws an important connection between two of the problems:

Consider the…heuristics―the rules for behavior that we habitually follow. Nudging doesn’t teach me not to use inappropriate heuristics or to abandon irrational intuitions or outdated rules of thumb. It does not try to educate my choosing…. Instead it builds on my foibles. It manipulates my sense of the situation so that some heuristic―for example, a lazy feeling that I don’t need to think about saving for retirement―which is in principle inappropriate for the choice that I face, will still, thanks to a nudge, yield the answer that rational reflection would yield. Instead of teaching me to think actively about retirement, it takes advantage of my inertia. Instead of teaching me not to automatically choose the first item on the menu, it moves the objectively desirable items up to first place. I still use the same defective strategies but now things have been arranged to make that work out better. Nudging takes advantage of my deficiencies in the way one indulges a child.

In other words, in addition to treating us as children, nudging instead of debiasing is likely to infantilize us, making it less likely that we will ever grow up. As Waldron writes at the end of the review: "I wish…that I could be made a better chooser rather than having someone on high take advantage (even for my own benefit) of my current thoughtlessness and my shabby intuitions."

I have one nitpick with the review: when he discusses drunk driving, Waldron doesn't seem to understand the notion of an order of magnitude difference: "…[I]n 2010, the number of people who were killed in alcohol-impaired driving crashes…was an order of magnitude lower than that, i.e., almost one ten thousandth of the number of incidents of DWI." This greatly understates the difference, since one ten-thousandth is actually four orders of magnitude less, because 10,000 = 10⁴.
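To count the orders of magnitude separating two quantities, take the base-10 logarithm of their ratio. Here is a minimal Python sketch; the function name `orders_of_magnitude` is my own, used purely for illustration, and the inputs are just the round numbers from the nitpick above, not Waldron's actual figures:

```python
import math

def orders_of_magnitude(larger: float, smaller: float) -> float:
    """Return how many powers of ten separate two positive numbers."""
    return math.log10(larger / smaller)

# "One ten-thousandth" means a ratio of 10,000 to 1.
print(f"{orders_of_magnitude(10_000, 1):.1f}")  # 4.0
# A single order of magnitude is a ratio of only 10 to 1.
print(f"{orders_of_magnitude(10, 1):.1f}")      # 1.0
```

So "an order of magnitude lower" means roughly one tenth, not one ten-thousandth.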

Sources:

- Jeremy Waldron, "It’s All for Your Own Good", The New York Review of Books, 10/9/2014

- Cass R. Sunstein & Jeremy Waldron, "Nudges: Good and Bad", The New York Review of Books, 10/23/2014

October 28th, 2014 (Permalink)

Hallowe'en Headline

Grieving Parents Shocked When Dead Son Answers The Door

"The paw!…The monkey's paw!"

Sources:

- W. W. Jacobs, "The Monkey's Paw"

- Suzy McCoppin, "Grieving Parents Shocked When Dead Son Answers The Door", Pop Dust, 10/12/2014

October 22nd, 2014 (Permalink)

Check Your Sanity!

A keen sense of proportion is the first skill―and the one most bizarrely neglected―for seeing through numbers. Fortunately, everyone can do it. Often all they have to do is think for themselves.―Michael Blastland & Andrew Dilnot, The Numbers Game

It's time once again to get out your old envelopes or used cocktail napkins, and go figure! A valuable critical thinking skill is to be able to check numerical claims made in the media for plausibility, a process which is sometimes called a "sanity check". You might be surprised at how many such claims won't even stand up to such a check, for the journalists who write media stories are often innumerate or number-phobic. For this reason, don't assume that reporters will check the numbers for you; we've seen many examples in the past―see the Resources, below―where they did not. Also, don't be afraid of the math! To do a sanity check seldom requires any advanced mathematics, and definitely does not require any in this case: if you can add, subtract, multiply, and divide, you can check the sanity of the following number.

The best way to learn how to do such number checks is to see how it's done and work a few yourself. As mentioned, you won't need to learn any new math; you'll just need to learn how to apply what you already know. Here's the number that we want to check for sanity, taken from Michael Blastland and Andrew Dilnot's excellent book The Numbers Game: "In 1997 the British Labour government said it would spend an extra £300 million (about $600 million) over five years to create a million new child care places."

Is this number sane? The question we want to answer is: Is $600 million over five years a large enough amount of money to create a million new child care places, that is, is that amount of funding for day care centers and other child care providers enough to lead to an additional million spots being available for parents who need day care?

How can you answer this question using simple math and what you already know? Most of us do not have experience with large numbers that would allow us to put them into perspective; we lack an intuitive feel for the difference between millions, billions, and trillions. So, one approach to dealing with large numbers is to use math to translate them into smaller numbers that we do have a feel for. Give it a shot; when you're ready, see the "Sanity Check" near the bottom of this page for one way to do it.

Source: Michael Blastland & Andrew Dilnot, The Numbers Game: The Commonsense Guide to Understanding Numbers in the News, in Politics, and in Life (2009), pp. 12-15

Resources:

- Caveat Lector, 6/25/2007

- The Back of the Envelope, 5/29/2008

- Who is Homeless?, 3/30/2009

- The Back of the Envelope, 7/2/2009

- The Back of the Envelope, 8/15/2009

- BOTEC, 11/14/2010

- How Not to Do a "Back of the Envelope" Calculation, 11/18/2010

- BOTEC, 2/6/2011

- Go Figure!, 3/6/2011

- Be your own fact checker!, 2/15/2012

October 21st, 2014 (Permalink)

New Book: Arguing with People

Arguing with People is a new book by philosopher Michael A. Gilbert, also author of How to Win an Argument: Surefire Strategies for Getting Your Point Across. As is suggested by the subtitle of the latter, both appear to focus on practical argumentation.

October 13th, 2014 (Permalink)

The Great Pumpkin

This is the third entry on Steven Poole's "Not So Foolish" article―see the Source, below, and the Resources for the two previous entries. The section of the article that I want to comment on is short, so I suggest reading or re-reading it, but here are the most important parts:

One interesting consequence of a wider definition of ‘rationality’ is that it might make it harder to convict those who disagree with us of stupidity. …Dan M Kahan, a professor of law and psychology, argues that people who reject the established facts about global warming and instead adopt the opinions of their peer group are being perfectly rational in a certain light: Nothing any ordinary member of the public personally believes about […] global warming will affect the risk that climate changes [sic] poses to her, or to anyone or anything she cares about. […] However, if she forms the wrong position on climate change relative to the one [shared by] people with whom she has a close affinity―and on whose high regard and support she depends on [sic] in myriad ways in her daily life―she could suffer extremely unpleasant consequences, from shunning to the loss of employment. Because the cost to her of making a mistake on the science is zero and the cost of being out of synch with her peers potentially catastrophic, it is indeed individually rational for her to attend to information on climate change in a manner geared to conforming her position to that of others in her cultural group.

I've omitted a paragraph comparing this situation to "the tragedy of the commons", which is a useful exercise if you're familiar with the "tragedy" but probably not otherwise. Also, while that analogy draws a distinction between individual and group rationality, that's not the distinction that I want to call attention to. I think Poole―and possibly Kahan, as well―is confusing two distinct types of individual rationality:

- The rationality of a belief: If the woman in the example comes to her belief in the wrong way, then her belief may be irrational. For instance, suppose that she thinks to herself: "My peers all disbelieve in climate change, and they would shun me if I believed in it, therefore I won't believe in it." In other words, the woman forms her disbelief using the appeal to consequences―see the Fallacy, below. The belief itself is not based on adequate or relevant evidence, therefore it is irrational.

- The rationality of believing: In contrast, despite the fact that the belief itself is irrational, it may still be rational for the woman to believe it, at least in the narrow, economic sense of "rational".

Perhaps a different example will make the distinction clearer: Let's take as our example of an irrational belief the existence of The Great Pumpkin. Presumably, we can all agree that there is not sufficient credible evidence of its existence, such that to believe in The Great Pumpkin is to believe in something irrational, that is, such a belief is irrational in sense 1, above.

Now, suppose that a rich man were to offer you a million dollars if you believe in The Great Pumpkin. Put aside the objection that you can't believe something by an act of will, which may be true but is beside the point. Also, to answer the objection that no one can see inside your head to tell if you really believe something, let's suppose that the rich man has a "psychoscope" that allows him to read your beliefs. So, in order to get the million dollars, you must really believe in The Great Pumpkin.

Clearly, it would be rational for you to believe in The Great Pumpkin, given that you stand to gain a million dollars and lose very little by so believing. Perhaps you'd be rather embarrassed by believing it, but a million dollars ought to help make up for that: you can cry all the way to the bank, as someone once said. So, it is rational for you to believe in The Great Pumpkin, but the belief itself is still irrational.

In other words, there are at least two different ways that a belief can be rational, and these ways may conflict. In the first way, it is rational to hold the belief because the belief itself―that is, the proposition that is believed―is supported by sufficient evidence. In the second way, it is rational to believe the proposition because of the good or bad consequences that may follow from believing or disbelieving it.

Now, there is no guarantee that these two types of rationality will always go together. There is, of course, no problem when one believes a proposition that is supported by appropriate evidence and expects good consequences from doing so. Similarly, we would all surely condemn believing a proposition for which one has no good evidence, neither expecting good nor fearing bad consequences of believing it.

What we have to wonder about, then, is when these types of rationality misalign: believing something for which one has inadequate evidence because of either good consequences one expects from believing, or bad consequences one fears from disbelieving. This is what's happening in the case of The Great Pumpkin, and that of the woman who doesn't believe in climate change. We needn't dwell on the case of those who persist in believing something for which they have good evidence in the face of threats of bad consequences or offers of rewards for not believing―they have their reward.

To return to Poole, I'm certainly on his side in thinking that we shouldn't be so quick to accuse those we disagree with of stupidity. For one thing, much of the time this is simply incorrect. It may indeed be rational, in the second sense discussed above, for the woman in Poole's example to believe what her peer group believes, or at least "to attend to information…in a manner geared to conforming her position to that of others in her cultural group". However, as far as I can see, this is no objection to the psychological work that Poole is criticizing. Instead, Poole is describing a particular way that people come to hold irrational beliefs, namely, it can be economically rational to believe a proposition that is poorly supported by evidence.

Source: Steven Poole, "Not So Foolish", Aeon Magazine, 9/22/2014

Resources:

- Wink-Wink, Nudge-Nudge, 9/29/2014

- The "Linda Problem" Problem, 10/2/2014

October 8th, 2014 (Permalink)

Wikipedia Watch

Here's a follow-up to last month's entry on Neil deGrasse Tyson's contextomy of President Bush―see the Resource, below. Wikipediocracy has an instructive article about the controversy on Wikipedia as to whether its biography of Tyson should mention the quote controversy―see the Source, below. Check it out.

As I write this, Wikipedia's biography of Tyson contains no reference at all to the Bush contextomy. One could justify this on the basis that the issue is too unimportant to include in a biography, which is indeed how some of its "editors" have argued against its inclusion on the article's "Talk" page. However, the article manages to mention that Tyson has been portrayed in an issue of Action Comics, as well as having appeared in an episode of The Big Bang Theory. It even notes Tyson's appearance as keynote speaker at "The Amazing Meeting", but without noticing the controversy that resulted from his speech. So, if this is any indication of Wikipedia's standards of significance, it's rather hard to believe that the contextomy just isn't important enough to merit, say, a short paragraph.

However, there's the additional objection that the "editors" are apparently unable to find reliable evidence that a controversy even exists, let alone that Bush was quoted out of context. This, despite the fact that Tyson has now admitted and apologized for misquoting Bush. Apparently, even Tyson himself isn't a sufficiently reliable source about what he said, since he only self-published his apology on his Facebook page! In contrast, we learn from the biography the important fact that Tyson won a gold medal in ballroom dancing while in college, despite there being no source at all cited. By the way, I'm available for remedial instruction in researching and evaluating evidence.

The "Talk" page debate suggests that Wikipedia is in danger of turning into, if it hasn't already become, a kind of Tower of Babel. While this entire issue could have been dealt with in a few sentences, and probably fewer than a hundred words in the article itself, thousands of words have been exchanged on the "Talk" page arguing back and forth about whether to include those hundred words. All of which suggests that if you must check Wikipedia, then you should also check the "Talk" page of any article you read to see how the sausage got made. You may be amazed by both what was put in and what was left out.

Source: Hersch, with research assistance from Eric Barbour & Andreas Kolbe, "'Our Wikipedia is the Wikipedia who defamed the stars'", Wikipediocracy, 10/5/2014

Resource: The Bushisms Strike Back!, 9/21/2014

Previous Wikipedia Watches: 6/30/2008, 10/22/2008, 1/25/2009, 3/22/2009, 5/16/2009, 7/21/2009, 1/9/2013, 10/24/2013, 5/2/2014, 7/21/2014

October 2nd, 2014 (Permalink)

The "Linda Problem" Problem

This is a sequel to the previous entry on Steven Poole's "Not So Foolish" article―see the end of that entry for a link. Instead of tacking it on as an "update", I've decided to make it a separate entry, since the famous "Linda problem" has interest in its own right.

As part of his criticism of skepticism about human reason, Poole critiques the well-known "Linda problem". His argumentative strategy here seems to be to undermine the claims of cognitive psychologists to have found faults in most people's thinking. In the case of the Linda problem, he argues that the answer often given by people to the problem, which is judged to be erroneous by psychologists, may actually be rational after all.

Poole introduces his criticism of the Linda problem by associating it with a definition of rationality from economics. Now, I think there are good reasons to be doubtful about the economic definition of rationality, which certainly seems too narrow if nothing worse. However, it doesn't appear that the Linda problem―or other pieces of evidence for cognitive errors involving judgments of probability―has anything specifically to do with economic "rationality". It's true that the work of psychologists on cognitive biases has been used to cast doubt on whether people are rational in the narrow economic sense, but the Linda problem suggests that people judge probabilities in ways that conflict with probability theory, which is a much deeper failure of rationality.

Poole's criticism of the psychologists' interpretation of the Linda problem is in terms of conversational implications of the way the original problem was worded. "Conversational" implications are implications of the fact that something was said, or the way it was said, or in this case of something that was not said, as opposed to implications of what was said. So, in the Linda problem to say that Linda is a bank teller and to say nothing else may be taken as implying that she's not a feminist.

This is a familiar objection, as it was discussed in Massimo Piattelli-Palmarini's book Inevitable Illusions twenty years ago. For this reason, I won't discuss it at length here; if you're interested in the details, see Piattelli-Palmarini's book. Anyway, the conjunction "fallacy" is still a mistake in probability theory, and at worst this objection shows that the "fallacy" may be a less common one than the experiments seem to show. It may even be the case that the mistake is not common enough to merit the term "fallacy".
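Why is it a mistake in probability theory? By the product rule, P(A and B) = P(A) × P(B | A), and since a conditional probability is never greater than 1, a conjunction can never be more probable than either of its conjuncts. The following minimal Python sketch illustrates this; the probabilities are made up purely for illustration and do not come from any study, and `conjunction_probability` is a hypothetical helper name:

```python
from fractions import Fraction

def conjunction_probability(p_a, p_b_given_a):
    """Product rule: P(A and B) = P(A) * P(B | A)."""
    return p_a * p_b_given_a

# Hypothetical figures, chosen only for illustration.
p_teller = Fraction(1, 20)                # P(Linda is a bank teller)
p_feminist_given_teller = Fraction(3, 4)  # P(feminist | bank teller)

p_both = conjunction_probability(p_teller, p_feminist_given_teller)
print(p_teller, p_both)  # 1/20 3/80

# Whatever the conditional probability (from 0 to 1), the conjunction
# never exceeds the probability of "bank teller" alone.
for k in range(11):
    assert conjunction_probability(p_teller, Fraction(k, 10)) <= p_teller
```

Exact fractions are used so that the comparison isn't muddied by floating-point rounding.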

So, let's grant Poole his point about the Linda problem and assume that few if any people actually commit the conjunction fallacy. Even so, the Linda problem is only one small, albeit well-known, piece of evidence in favor of the cognitive psychologists' conclusions, and the conjunction "fallacy" is just one of many supposed cognitive biases and illusions. So, even if we accept Poole's argument on this point, it goes only a small way in undermining the psychologists' case.

Source: Massimo Piattelli-Palmarini, Inevitable Illusions: How Mistakes of Reason Rule Our Minds (1994), pp. 67-68.

Fallacy: The Conjunction Fallacy

Sanity Check: Here is how Blastland and Dilnot (B&D) check the sanity of this number―I've replaced the amounts given in the book in pounds with dollar amounts, using the exchange rate of two dollars to a pound that the authors used:

Share it out and it equals [$600] per place. Divide it by five to find its worth in any one year (remember, it was spread over five years), and you are left with [$120] per year. Spread that across fifty-two weeks of the year and it leaves [$2.30] per week. Could you find child care for [$2.30] a week?

See what B&D have done: they've taken a number that is too big to evaluate―$600,000,000―and used math to turn it into one that we can understand―$2.30 per week for child care. Even if we assume that some parents won't need child care every week of the year, most adults and nearly all parents will have enough experience to realize that $120 a year won't buy very much child care.

Notice, also, that the only math you need is division: $600 million is first divided by a million to get the amount per place; that's so easy you can do it in your head! $600 per spot is then divided by five to get the amount per year; that's pretty easy, too, though maybe here you'll need a calculator, or a napkin and a crayon. Finally, $120 per year is divided by 52 to get the amount per week; if you're like me you'll definitely need a pencil and used envelope for that step, but now you're done. Even if you're afraid of numbers or think that you're not good at math, you can do this!
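If you'd rather let a computer hold the crayon, the same three divisions fit in a few lines of Python. This is only a sketch; `per_week` is a hypothetical name, and the inputs are the dollar conversions used above:

```python
def per_week(total_dollars, places, years, weeks_per_year=52):
    """Break a multi-year budget down to dollars per place per week."""
    per_place = total_dollars / places   # $600 million / 1 million places
    per_year = per_place / years         # spread over five years
    return per_place, per_year, per_year / weeks_per_year

per_place, per_year, weekly = per_week(600_000_000, 1_000_000, 5)
print(f"${per_place:,.0f} per place")  # $600 per place
print(f"${per_year:,.0f} per year")    # $120 per year
print(f"${weekly:.2f} per week")       # $2.31 per week
```

The exact weekly figure is about $2.31; B&D's $2.30 is the same amount rounded down, which makes no difference to the verdict.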

Finally, here's what B&D discovered when they questioned a journalist:

When we asked the head of one of Britain's largest news organizations why journalists had not spotted the absurdity, he acknowledged there was an absurdity to spot, but said he wasn't sure that was their job. …[N]ext time someone uses a number, do not assume they have asked themselves even the simplest question. Can such an absurdly simple question be the key to numbers and the policies reliant on them? Often, it can.

Solution to a Hallowe'en Costume Contest Puzzle: Since only one of the three friends was correct in predicting which costume would win the contest, we have three cases to consider:

- Suppose that Alice was right, but Bob and Carol both wrong. Since Bob wrongly predicted that either the mummy or werewolf would win, then neither won. Also, given that Carol incorrectly predicted that either Dracula or the Frankenstein monster would win, neither won. However, this means that all four finalists would have lost the contest, which is impossible.

- Suppose that Bob was right, and Alice and Carol wrong. Since Alice was wrong, that means that the vampire costume won the contest. However, Carol wrongly predicted that either the vampire or the Frankenstein monster would win, which means that both lost. Thus, Dracula both won and lost, which is impossible.

- Finally, suppose that Carol was right, but Alice and Bob wrong. Given that Alice was wrong, then the Dracula costume must have won the contest. Carol was indeed correct that either the vampire or the Frankenstein monster would win. Also, Bob was wrong in predicting that either the mummy or werewolf would win, since both lost. Thus, this is the only consistent possibility and must, therefore, be the truth.
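The case analysis above can also be checked by brute force: try each finalist as the winner and count how many of the three friends' predictions come out true. Here is a minimal Python sketch; the costume labels are my own shorthand, with "vampire" standing for the Dracula costume:

```python
COSTUMES = ["vampire", "Frankenstein's monster", "mummy", "werewolf"]

def predictions(winner):
    """Truth values of Alice's, Bob's, and Carol's predictions."""
    alice = winner != "vampire"
    bob = winner in ("mummy", "werewolf")
    carol = winner in ("vampire", "Frankenstein's monster")
    return [alice, bob, carol]

# Keep only the winners for which exactly one friend was right.
solutions = [w for w in COSTUMES if sum(predictions(w)) == 1]
print(solutions)  # ['vampire']
```

Every other winner makes two of the friends right, so the vampire is the only consistent answer.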

Answer: The Dracula costume won the contest!