
WEBLOG

November 30th, 2022

How many people who died with COVID-19 died from COVID-19?

Only one recommended reading this month:

Alex Berenson, "A veteran medical examiner who reviewed 4000 Covid deaths explains how many were really from Covid (and how many were of healthy people)", Unreported Truths, 11/4/2022

Until September, Brian Peterson served as chief medical examiner for Milwaukee County. … When the coronavirus epidemic began in 2020, Peterson decided to review every Covid-related death in the county―to see for himself who was dying and how. Over the next two-and-a-half years, he made brief reviews of medical records for about 4,000 people that physicians had said died of Covid. … It is possible that Peterson has looked at medical records for more individual Covid-related deaths than anyone else. Here’s what he found.

Roughly 20 percent of deaths that physicians certified as Covid-related were not. Some would have been obvious to a layperson―the classic example being a homicide victim who happened to have a positive Covid test. Peterson…noted others, though. An example he gave: someone who died of congestive heart failure and was noted as having shortness of breath following a positive coronavirus test―but no other Covid-related symptoms or treatment. Shortness of breath is a standard symptom in people who die of congestive heart failure. Peterson said he didn’t see how attributing it, or the death, to Covid made sense.

Another 20 percent of deaths came in people with very late-stage cancer or other terminal conditions who did have Covid and symptoms specific to it when they died. Those people would likely have died in days or weeks even if they had not been infected, Peterson said. Still, he added Sars-Cov-2 to their death certificates as a secondary cause, since the virus had hastened their deaths.

In other words, about 40 percent of all the deaths attributed to Covid had either a marginal link to it or none at all. The remaining 60 percent came in people who had positive coronavirus tests, had Covid symptoms and…received Covid-specific treatments, and were not at imminent risk of death when they contracted Covid and died. In those cases, Peterson agreed that the coronavirus was the primary cause of death and reported it that way on their death certificates. …

Stil[l], the people who died of Covid were almost always very unhealthy, he said. …[F]ewer than 1 percent of all the Covid deaths Peterson reviewed had occurred in people who were not already very unwell. …

In early 2021, Peterson’s unwillingness to rubber-stamp Covid deaths became more controversial. The Biden Administration’s “American Rescue Plan” included government reimbursements of up to $9,000 for funeral expenses for Covid deaths. Run through the Federal Emergency Management Agency, the funeral program was retroactive to January 2020.

No one has ever explained exactly why people who die of Covid should get thousands of dollars for their funerals when no one else does. Nonetheless, the program has paid out more than $2.6 billion to more than 400,000 families and still continues.

“The Covid bounty became an issue,” Peterson said. “A lot of families were angry when I wouldn’t put Covid on the death certificate.” His explanations did little to calm them. Doctors had told them their family members had died of Covid. …

Still, Peterson kept filling out certificates as he saw fit. … Then, in the summer of 2022, Peterson made another move that put him even further out of step with his political overlords. He began reporting the Covid vaccination status of the people who’d died on their certificates. Peterson…simply felt the information might be pertinent, he said. …

On Monday, September 19, 2022, Peterson’s time ran out. He was called into an “emergency meeting” with Milwaukee County’s head of human resources and an assistant to the county executive. He did not know what was coming, he said…. In a four-minute meeting, he was told the county [had] two choices for him, resign or retire, he said. He chose to resign…. He had been chief medical examiner for 12 years.

The above is only the evidence of one medical examiner in one county, but it does suggest a systematic incentive to overcount COVID-19 deaths. There is a similar incentive for overcounting cases, as well: under the CARES Act, passed during the Trump administration, hospitals receive an additional 20% compensation from Medicare for treating cases attributed to COVID-19[1]. It's hard to believe that no hospitals respond to this incentive by classifying as many cases as they can get away with as COVID-19, especially given the financial losses they suffered due to the delay of so-called elective surgeries during the pandemic[2].

None of this proves that either cases or deaths have been overcounted, but it certainly suggests the possibility. While there are no monetary incentives for undercounting, as far as I know, it's possible that there has been undercounting for other reasons. Have the overcounting and undercounting cancelled each other out? Given that the hysterical over-reaction to the pandemic seems to have finally died down, perhaps now is the time for a systematic review of the number of COVID-19 cases and deaths.

Notes:

- Angelo Fichera, "Hospital Payments and the COVID-19 Death Count", Fact Check, 4/21/2020

- Sourav Bose & Serena Dasani, "Hospital Revenue Loss from Delayed Elective Surgeries", Leonard Davis Institute of Health Economics, 3/16/2021

Disclaimer: I don't necessarily agree with everything in this article, but I think it's worth reading as a whole. In abridging it, I have sometimes changed the paragraphing.

November 27th, 2022

Q&A: Whataboutism

Reader Joseph C. Welling writes:

Q: I find the taxonomy of fallacies highly instructive. I write to suggest another alias for "red herring" arguments: the "what about" argument. E.g., in a discussion with a 2020 election denier, I was faced with: "What about Hillary Clinton?" That's irrelevant, because even if Hillary had refused to concede the 2016 election, it doesn't make it any more true that Trump won in 2020. But it's often hard to resist temptation to take the bait and start a tangential discussion.

A: You're correct that this is a type of Red Herring argument, that is, one that distracts attention away from the point at issue, just as a "red herring" in a mystery story draws attention away from the guilty suspect. However, Red Herring is a general type of fallacy, with a lot of subfallacies. Using the Taxonomy, I think we can drill down to something more specific.

One of the subfallacies of Red Herring is Two Wrongs Make a Right. This is the mistake of trying to justify something wrong by pointing to another instance of the same type of wrong. As you noted, this is what happened in the Trump versus Clinton example, since Clinton's doing something wrong would not justify Trump's doing it as well. In fact, if what Clinton did was wrong, then what Trump did must also be wrong, and vice versa; if the two aren't the same type of wrong, then the "what about" question just changes the subject, and it's a simple Red Herring.

I discussed this type of mistake in a previous entry[1]―in fact, in an earlier Q&A―but without reference to the "what about" locution. At that time, I was not aware that it had been called "whataboutism", and suggested instead the name "tu quoque by proxy". That's not such a good name because it includes Latin―"tu quoque"―and it's far from obvious what mistake it refers to, even if you're familiar with the fallacy of tu quoque.

The term "whataboutism" apparently goes back at least to 1978[2], giving it some claim to be an established name for the mistake. In the specific context in which it was used, it referred to a phenomenon I well remember: the Soviet Union and its apologists would deflect all criticisms of its dreadful human rights abuses by pointing to the usually far less dreadful abuses of its critics. This, however, is a clear example of a tu quoque fallacy, since the criticism is turned back against the critic.

The way in which "whataboutism" is defined nowadays[3] makes it sound, instead, like an alias for the more general fallacy of Two Wrongs Make a Right. I prefer the latter name since "two wrongs don't make a right" is a familiar phrase―at least it was when I was growing up―and it describes the logical nature of the mistake in a way that "what about" does not, which makes it a good mnemonic. Finally, as we saw with the example from the previous entry, not every occurrence of this fallacy uses the "what about" phrase.

For these reasons, I think "whataboutism" is an alternative name for Two Wrongs Make a Right, a subfallacy of Red Herring.

Notes:

- Q&A, 6/17/2010

- "What about 'whataboutism'?", Merriam-Webster, accessed: 11/26/2022

- "Whataboutism", Merriam-Webster, accessed: 11/26/2022

November 24th, 2022

A Family Thanksgiving Puzzle

This year, Mr. & Mrs. Baker are planning to have their whole extended family to the house for Thanksgiving dinner. Unfortunately for them, the family is known for its picky and eccentric eaters. Just planning what kind of pies to bake for dessert is a puzzle. Mrs. Baker is planning to bake at least one pie for the meal, but no more than three. Eight of their relatives refuse to touch anything with pumpkin in it, five can't stomach sweet potatoes, and four can't stand either pumpkin or sweet potatoes. Seven are allergic to nuts, so pecan pie can't be the only alternative. Three will touch neither pumpkin nor pecans, and two object to both nuts and sweet potatoes. Only one member of the family is willing to eat all three. Finally, one of the kids even refuses to eat any kind of pie.

How many members of the Baker family are coming to Thanksgiving dinner?

Extra Credit: Assuming that each pie makes at least six slices, what is the minimum number of pies that Mrs. Baker can bake in order to serve at least one slice of pie to all of her guests except for the one fussy kid who refuses to eat pie? What types of pie are they?

If you need help in solving this and similar puzzles, see: Using Venn Diagrams to Solve Puzzles, 1/18/2017, and Part 2, 3/7/2017.

Solution: 13

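Extra Credit Solution: Three pies: pumpkin, sweet potato, and pecan. Three relatives will eat only pecan, two only sweet potato, and one only pumpkin, so no two kinds of pie can serve everyone; three six-slice pies give 18 slices, enough for the twelve pie eaters.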

WARNING: May contain nuts.
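For readers who'd like to check the Venn-diagram arithmetic mechanically, here is a small Python sketch. It assumes, as the answer of 13 implies, that the pie-refusing kid is the only relative who refuses all three kinds, so the puzzle's overlap counts include that kid. The function names `venn_regions`, `can_serve`, and `fewest_pies` are made up for this illustration. The sketch fills in each region of the diagram from the counts given, then searches for the fewest six-slice pies that can feed everyone but the kid, using Hall's marriage condition to test whether the slices can be handed out.

```python
from itertools import combinations, product

PIES = ("pumpkin", "sweet potato", "pecan")
SLICES_PER_PIE = 6

# Counts from the puzzle: relatives who refuse each kind of pie...
REFUSE_ONE = {"pumpkin": 8, "sweet potato": 5, "pecan": 7}
# ...and relatives who refuse each pair of kinds.
REFUSE_PAIR = {
    frozenset({"pumpkin", "sweet potato"}): 4,
    frozenset({"pumpkin", "pecan"}): 3,
    frozenset({"sweet potato", "pecan"}): 2,
}
REFUSE_ALL = 1  # assumption: the no-pie kid is the only one refusing all three
EATS_ALL = 1    # the one relative willing to eat every kind


def venn_regions():
    """Map each set of refused pies to the number of guests in that Venn region."""
    regions = {frozenset(PIES): REFUSE_ALL, frozenset(): EATS_ALL}
    # Exactly-two regions: remove the centre from each pairwise count.
    for pair, n in REFUSE_PAIR.items():
        regions[pair] = n - REFUSE_ALL
    # Exactly-one regions: remove the two-way regions and the centre.
    for pie, n in REFUSE_ONE.items():
        overlaps = sum(regions[pair] for pair in REFUSE_PAIR if pie in pair)
        regions[frozenset({pie})] = n - overlaps - REFUSE_ALL
    return regions


def can_serve(pie_counts, regions):
    """Hall's condition: for every set of pie kinds, the guests who accept only
    kinds in that set must fit into the slices from pies of those kinds."""
    for size in range(1, len(PIES) + 1):
        for kinds in combinations(PIES, size):
            allowed = set(kinds)
            demand = sum(
                n
                for refused, n in regions.items()
                if refused != frozenset(PIES)  # skip the kid who eats no pie
                and set(PIES) - refused <= allowed
            )
            supply = SLICES_PER_PIE * sum(pie_counts[p] for p in allowed)
            if demand > supply:
                return False
    return True


def fewest_pies(regions, max_pies=3):
    """Smallest {kind: number of pies} that serves every pie eater, or None."""
    for total in range(1, max_pies + 1):
        for counts in product(range(total + 1), repeat=len(PIES)):
            if sum(counts) == total:
                pie_counts = dict(zip(PIES, counts))
                if can_serve(pie_counts, regions):
                    return pie_counts
    return None


regions = venn_regions()
print(sum(regions.values()))  # 13
print(fewest_pies(regions))   # {'pumpkin': 1, 'sweet potato': 1, 'pecan': 1}
```

Because some relatives accept only one kind of pie, every two-pie combination fails the check, and the search settles on one pie of each kind.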

November 19th, 2022

A Post-Election Trump Effect Post-Mortem

The results for the midterm election are, finally, mostly in. Apparently, the people in certain states forgot how to count, or perhaps their schools are so bad that they never learned in the first place. Whatever the explanation, I'm going to go ahead and write about the results even though they are still partial in some states. For example, there is a runoff election for a Georgia Senate seat next month[1].

Nevertheless, the Democrats have now won 50 seats in the Senate so that they will maintain control of it[2]. Depending on how the Georgia runoff goes, they may end up gaining a seat, or we will be back where we started before the election, with a 50-50 divided Senate.

Turning to the House of Representatives, the Republicans have now won at least 218 seats, which gives them just enough for control. This is a gain of eleven seats for the GOP, but five races are still undecided[3].

In an entry last month[4], I discussed the "Trump effect" hypothesis, which I defined as an average four-point bias in the polls against GOP candidates. There was certainly no sign of such an effect in this election; if anything, there seems to have been the opposite effect, as the Democrats outperformed the polls. By exactly how much Democrats beat the polls I don't know, but it doesn't matter for the Trump effect hypothesis, which predicted that it was the Republicans who should do better than expected. Except in certain states, the GOP did not do better than expected, and mostly did worse.

As a sequel to last month's entry, let's look at what eight polling pundits predicted[5] and compare it to what actually happened. The only thing that all eight "experts" agreed on was that the Republicans would take control of the House of Representatives, and that's the one thing they were right about. What they disagreed on was exactly how big the Republican majority would be, which still isn't completely decided. However, those pundits who had the temerity to make a prediction thought that the GOP would gain at least fifteen seats, and maybe as many as thirty. It's still possible that the party will reach a gain of fifteen seats, but if it doesn't, then none of the predictions will have come true.

As for the Senate, three of the pundits made no prediction, and one said it was an "open question". Of the four remaining pundits, one simply predicted that the Republicans would win control of the Senate; Nate Silver gave the GOP a 54% chance of taking both houses of Congress; Frank Luntz thought that they would win 51 Senate seats; and Scott Rasmussen saw Luntz' 51 and raised it two. So, those who committed themselves were wrong, wrong, wronger, and wrongest.

The only thing that the polling pundits were right about is the one thing that they were unanimous on. So, one lesson of this exercise is that, when the pundits all agree, they're probably right; but when they disagree among themselves, or are unwilling to commit themselves, then they're probably wrong.

As I mentioned last month, this election was not a definitive test of the Trump effect hypothesis, since Trump himself was not on the ballot. Now that Trump has announced his candidacy for 2024[6], we may see a further test of his namesake effect in two years, though of course a lot can happen between now and then.

A general lesson of this election is that, even when the polls are about as accurate as can be expected, they're little use in predicting the outcome of a close election. Most American elections nowadays are close.

Notes:

- "Georgia Election 2022 (Runoff)", Georgia, accessed: 11/18/2022.

- "Election results, 2022: U.S. Senate", Ballotpedia, accessed: 11/18/2022.

- "Latest Developments, Analysis and Results", The Wall Street Journal, accessed: 11/19/2022.

- Polling All Pundits, 10/15/2022.

- Theara Coleman, "8 Election Day predictions from the nation's leading pollsters", The Week, 11/8/2022.

- David Jackson, Erin Mansfield & Rachel Looker, "Donald Trump announces his 2024 presidential campaign as GOP debates future: recap", USA Today, 11/16/2022.

November 14th, 2022

Untying the Nots[1]

In a reference book that I was consulting while researching a previous entry[2], I came across the following passage. I want primarily to draw your attention to the last sentence in this paragraph, but I give it in full here because the entire context will be important later:

Although there are drawbacks to both the UCR [Uniform Crime Reports] and the National Crime Victimization Survey [NCVS], no major alternative data source exists. Consequently, the challenge for researchers is to choose the data for which inaccuracies are least likely to affect the issue being studied. For some purposes the choice is readily apparent: the UCR more accurately designates types of crime because it uses standard legal definitions; but the [NCVS] gives a better estimate of the total number of crimes, including those not reported to the police. In comparison to the NCS [National Crime Survey], new procedures in the NCVS provide improved data on crime by nonstrangers that are particularly unlikely to go unreported and therefore not to be counted in the UCR.[3]

Putting aside the alphabet soup, the second clause of the last sentence is particularly hard to fathom: "…[N]ew procedures in the NCVS provide improved data on crime by nonstrangers that are particularly unlikely to go unreported and therefore not to be counted in the UCR." There are four negations―"non-", "un-" twice in a row, and "not"―which make it unnecessarily difficult to understand.

In addition, there's a grammatical error in the sentence that contributes to making it confusing, so let's get that out of the way first. In the phrase "crime by nonstrangers that are particularly unlikely to go unreported", the noun―"crime"―and verb―"are"―do not agree in number. That phrase should be either "crimes by nonstrangers that are particularly unlikely to go unreported" or "crime by nonstrangers that is particularly unlikely to go unreported".

Can the example sentence be rewritten with fewer negations? Having eliminated the unnecessary negations, does the rewritten sentence make sense? Sometimes writers get so tangled up in negations that they say the opposite of what they intended. Given that it makes sense, does the rewritten sentence express what the author apparently intended?

If you want to try untangling the "nots" in the sentence, do so now. Then, when you're ready to check out my attempt, read on.

The best way to approach this kind of task is in a piecemeal fashion. Logically, double negations usually cancel each other, so a good rule of thumb is that there should be either no negations in a simple sentence, or only one. The example, however, is a compound sentence consisting of two simple ones connected by "and therefore". Stated separately, the two simple sentences are:

- New procedures in the NCVS provide improved data on crimes by nonstrangers that are particularly unlikely to go unreported.

- [Crimes that are particularly unlikely to go unreported] are not counted in the UCR.

The four negations are in the words or phrases: "nonstrangers", "unlikely to go unreported", and "not to be counted". There are three negations in each sentence, so it should be possible to reduce that number to at most one apiece, for a total of two for the whole sentence. Let's look at the negated phrases individually:

- "Nonstrangers": These are people you know, such as friends, family, and people you've met. That's a long list, so let's just call them all "acquaintances".

- "Unlikely to go unreported": This is the clearest case of two negations that cancel out. If something is unlikely to go unreported, then it's likely to be reported.

- "Not to be counted": This means the same as "uncounted", but there doesn't seem to be any positive way of phrasing it.
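The claim that "unlikely to go unreported" means "likely to be reported" can be checked with a toy probability model. In this sketch, the cutoff and the helper names `likely`, `unlikely`, and `unlikely_to_go_unreported` are assumptions for illustration only: "unlikely" is taken to mean a probability below the cutoff, and "likely" to mean a probability above one minus the cutoff. Exact fractions are used to avoid floating-point rounding at the boundary.

```python
from fractions import Fraction

CUTOFF = Fraction(1, 2)  # hypothetical: "unlikely" means probability below 1/2


def unlikely(prob):
    """'Unlikely' in this toy model: probability below the cutoff."""
    return prob < CUTOFF


def likely(prob):
    """'Likely' is the mirror image: probability above 1 - cutoff."""
    return prob > 1 - CUTOFF


def unlikely_to_go_unreported(p_reported):
    # Going unreported is the complement of being reported.
    return unlikely(1 - p_reported)


# The two negations cancel: for every tested probability of being reported,
# "unlikely to go unreported" agrees with "likely to be reported".
for k in range(101):
    p = Fraction(k, 100)
    assert unlikely_to_go_unreported(p) == likely(p)
print("the double negation cancels at every tested probability")
```

Any other cutoff between 0 and 1 gives the same result, since 1 - p < c holds exactly when p > 1 - c.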

With these points in mind, let's rephrase the simple sentences:

- New procedures in the NCVS provide improved data on crimes by acquaintances that are particularly likely to be reported.

- [Crimes that are particularly likely to be reported] are uncounted in the UCR.

Recombining the two simple sentences into one, we get:

New procedures in the NCVS provide improved data on crimes by acquaintances that are particularly likely to be reported and therefore to be uncounted in the UCR.

This makes sense in isolation, but not in the context of the full paragraph in which it occurred. It would appear that the author got so tangled up in all those negations that he ended up writing something that he didn't mean. If acquaintance crimes are likely to be reported, then they would be counted in the UCR. So, it seems likely that what the author meant was:

New procedures in the NCVS provide improved data on crimes by acquaintances that are particularly unlikely to be reported and therefore to be uncounted in the UCR.

This sentence has two negations, but they are in separate simple sentences joined by a conjunction, so they don't cancel out. Presumably, crimes by acquaintances are unlikely to be reported to the police because people don't want to report family members or friends even when they commit crimes. As a result, they wouldn't be counted in the Uniform Crime Reports. This sentence makes sense in the context of the full passage, which is more than can be said for the original sentence.

Notes:

- Previous entries in this series:

  - Untie the Nots, Part 3, 2/1/2010

  - Untie the Nots, Part 4, 3/2/2010

  - Untie the Nots, Part 5, 2/11/2013

  - Untie the Nots, Part 6, 10/1/2013

  - Can You Untie the Nots?, 3/24/2016

- Charts & Graphs: The Case of the Missing Murders, 11/10/2022

- Mark H. Maier, The Data Game: Controversies in Social Science Statistics (3rd edition, 1999), p. 105

November 10th, 2022

Charts & Graphs: The Case of the Missing Murders

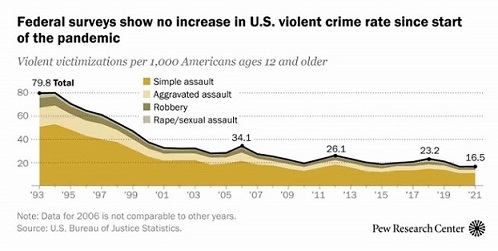

In a chart or graph, what is not shown is sometimes as important as what is shown. Check out the following one1:

|

There are two problems with the title given to this chart, a major and a minor one. Let's start with the minor one: The title of the chart trumpets the lack of change in the "violent crime rate" since the beginning of the pandemic, yet the period from 2020 to 2021 is represented only by the last notch on the chart. If a big deal is to be made about the time period of the pandemic, then the chart should begin at 2019 or 2020 and end at 2021, the most recent year with complete data. Given that so few years are involved, it would be just as well to forgo a graph and simply compare the rates among the years. As it is, it's impossible to read the yearly rates from the chart itself, except for 2021, though the accompanying article tells us that there were no statistically significant changes in the rate in those years[2].

The major problem with the title is its claim that there was no increase in the "violent crime rate" during the pandemic. There are only four types of violent crime counted: simple and aggravated assault, robbery, and rape or sexual assault. Conspicuously missing is murder, which is not only a violent crime, but "the most serious form of violent crime", according to the article itself.

One question to always ask about the statistics of social problems is: How are the terms defined? To count something you must first define it. What counts as a "violent crime"? However one might define the phrase, surely murder should count as a violent crime. Yet the title of the graph appears to be defining "violent crime" so as to exclude murder.

The article that accompanies the graph does explain that the statistics on which the graph is based come from a survey of victims of violent crime. Obviously, victims of murder could not be surveyed. This is understandable, but it doesn't justify redefining "violent crime" to exclude murder.

To its credit, the full article does discuss the increase in the murder rate over the last few years:

Both the FBI and the Centers for Disease Control and Prevention (CDC) reported a roughly 30% increase in the U.S. murder rate between 2019 and 2020, marking one of the largest year-over-year increases ever recorded. The FBI’s latest data, as well as provisional data from the CDC, suggest that murders continued to rise in 2021.[2]

It's certainly good news that such crimes as assaults, robberies, and rapes have not increased nationwide, but the increase in homicides is anything but good news. Given that the murder rate increased by almost one-third in a single year, whereas the rates of other violent crimes did not diminish, the overall rate of violent crime, murder included, did increase since the beginning of the pandemic, though probably not by much. Thankfully, despite its recent rise, murder remains a comparatively rare crime, so even such a large increase would not raise the overall rate of violent crime by a lot.
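The last point is weighted-average arithmetic: if only one component of a total rises, the total rises by that component's share times its own rise. In this minimal Python sketch, the murder share of 1 in 200 violent crimes is a made-up figure for illustration, not a statistic from the article, and `overall_change` is a hypothetical helper.

```python
from fractions import Fraction


def overall_change(murder_share, murder_rise):
    """Relative change in the total violent-crime rate when murders rise by
    murder_rise (3/10 = 30%) and every other violent crime stays flat.

    murder_share is murders as a fraction of all violent crimes beforehand.
    """
    before = Fraction(1)  # normalize the old total rate to 1
    after = (1 - murder_share) + murder_share * (1 + murder_rise)
    return (after - before) / before


# Hypothetical share: 1 murder per 200 violent crimes, plus a 30% rise in murders.
change = overall_change(Fraction(1, 200), Fraction(3, 10))
print(change, float(change))  # 3/2000 0.0015, i.e. a rise of 0.15%
```

The result is always the share times the rise, so the rarer murder is relative to other violent crimes, the less even a large jump in murders moves the total.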

A problem with the chart itself, rather than just its title, is that the timeline begins at 1993, the peak year for violent crime in the U.S. over the last fifty years or so[3]. As a result of starting at its peak, the chart gives the impression of a declining violent crime rate. This is certainly a correct impression for the first half of the chart, but a closer look shows that the steady but slowing decline stalls around 2010, after which the rate stays roughly flat. This is an example of a graphing trick that we've seen before[4]: if you want to show a downward trend, start the x-axis at a local peak; if you prefer to show an upward trend, start it at a local trough.
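The start-point trick can be seen with a toy series. In this Python sketch the yearly rates are invented for illustration, not taken from the chart, and `trend_slope` is a hypothetical helper that fits an ordinary least-squares line. The same data read as a rising trend when the window starts at the trough, and as a falling trend when it starts at the peak.

```python
def trend_slope(values):
    """Ordinary least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


# Made-up yearly rates: a rise to a peak, a decline, then a flat stretch.
rates = [20, 25, 30, 35, 40, 36, 32, 29, 28, 28, 28]

trough = rates.index(min(rates))  # first year
peak = rates.index(max(rates))    # fifth year

print(trend_slope(rates[trough:]))  # positive: the series looks like it is rising
print(trend_slope(rates[peak:]))    # negative: the series looks like it is falling
```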

Since the article that accompanies the chart mentions the increased murder rate, it's not likely that anyone who reads it will come away with a false impression, but the chart itself has been passed around on "social" media out of context. Graphs should be able to stand or fall on their own, and not require a great deal of written explanation in order to prevent misinterpretation. Whatever can be misinterpreted will be.

Notes:

- "Federal surveys show no increase in U.S. violent crime rate since start of the pandemic", Pew Research Center, 10/31/2022

- John Gramlich, "Violent crime is a key midterm voting issue, but what does the data say?", Pew Research Center, 10/31/2022

- See: Mark H. Maier, The Data Game: Controversies in Social Science Statistics (3rd edition, 1999), Figure 6.1, p. 101.

- See: Charts & Graphs: Why, Oh Why?, 2/2/2021

November 7th, 2022

Skepticism About Conspiracy Theories

Quote: So ubiquitous are JFK assassination conspiracy theories that there's even a joke featuring a conspiracist who dies and goes to heaven, where God offers to reward him for a life well lived by answering any question he'd like to ask.

CONSPIRACIST. "Who actually killed John F. Kennedy?"

GOD. "Lee Harvey Oswald, acting alone…."

CONSPIRACIST. "This goes even higher than I thought."[1]

Title: Conspiracy

Comment: This title is a bit imprecise since, as far as I can tell, the book is not primarily about actual conspiracies, though it does discuss some of those, but about conspiracy theories (CTs).

Subtitle: Why the Rational Believe the Irrational

Comment: The subtitle helps to clarify that the topic of the book is not conspiracies in general, but irrational theories about them. I'm sure that we've all wondered how otherwise rational people can believe some silly CT, even those of us who believe in some CTs ourselves. For any given CT, no matter how foolish, there always seems to be another that is even more so.

Author: Michael Shermer

Comment: Shermer is the man behind Skeptic magazine, and the author or co-author of several previous books. Among his earlier books that I've read are: Why People Believe Weird Things, such as conspiracy theories, and Denying History, which is about Holocaust denial, a form of conspiracy theory. I recommend both.

Date: 2022

Summary: Based on the table of contents, the book is divided into three parts:

- Why people believe CTs: This part includes a short history of CTs (Chapter 2), a discussion of three types of conspiracism (Chapters 3 & 4), and a case study of a CT, specifically, the "sovereign citizens" CT, which I'd never heard of (Chapter 5).

- How to tell if a conspiracy is real: Chapter 6 provides a "conspiracy detection kit"―I don't know what this is and have doubts as to whether such a thing is even possible, but I haven't read this chapter yet. The next two chapters concern specific CTs: 9/11 CTs and the ones claiming that Obama was not born in the U.S. (Chapter 7), and the most famous CT of all, namely, that President John F. Kennedy was assassinated by a conspiracy and not, as God said above, by Lee Harvey Oswald, acting alone (Chapter 8). Next, Shermer deals with what he claims are real conspiracies (Chapters 9 & 10), including, I guess, the real conspiracy to assassinate the Archduke Franz Ferdinand that started World War I (Chapter 10).

On the latter event, were there any actual CTs about it? As far as I know―which is, admittedly, not very far―the conspiracy was discovered almost immediately, and there was no time for a CT to develop. CTists often criticize CT skeptics, such as myself, on the grounds that there are real conspiracies, which is something that no one denies. The problem is that real conspiracies are almost impossible to cover up, and are usually revealed as such immediately, which is one reason that I doubt that a "conspiracy detection kit" is even needed. Similarly, CTists will point to historical conspiracies as evidence of CTs that have come true; but in most cases, there were no CTs about real conspiracies because there was no time for them to develop. There are real conspiracies, yes, but are there any real conspiracy theories?

At the risk of repeating myself[2], as the phrase is commonly used, a "conspiracy theory" is not just a theory about a conspiracy. For a theory to count as a CT, there needs to be at least an alleged cover-up of the conspiracy. This is why, for instance, there is no CT about the assassination of Julius Caesar by a conspiracy, since the conspirators made no attempt to hide their deed but killed him openly. Calling an historical account of Caesar's assassination a "conspiracy theory" would be misleading due to the negative connotations of the phrase.

- How to talk to CTists: This part has two chapters of advice on how to communicate with CTists. I don't know whether Shermer thinks that one can reason CTists out of their conspiracist beliefs, and my experience certainly suggests otherwise, but I'm curious as to what he recommends.

The Blurbs: The book is positively blurbed by social psychologist Carol Tavris and geographer Jared Diamond, among others.

General Comment: In what follows, keep in mind that I haven't read the entire book, and am basing this on only those parts I have read, which are primarily the "Apologia", "Prologue", and Chapter 1.

Here's Shermer on what he calls "constructive conspiracism":

The assumption by most researchers of and commentators on conspiracy theories is that they represent false beliefs, which is why the term has become a pejorative descriptor. This is a mistake, because, historically speaking, enough of these theories represent actual conspiracies. Therefore, it pays to err on the side of belief, rather than disbelief, just in case. With a lot at stake, especially one's identity, livelihood, or even life―which was the case during the Paleolithic environment in which we evolved our conspiratorial cognition―it is often better to assume that a conspiracy theory is real when it is not (a false positive), instead of believing it is not real when it is (a false negative). The former just makes you paranoid, whereas the latter can make you dead.[3]

Being paranoid can also make you dead.

…[A] global factor in understanding why people believe conspiracy theories is that enough of them are true, so it pays to err on the side of belief rather than skepticism. For example, the assassinations of Julius Caesar and Abraham Lincoln indeed were conspiracies, as were Watergate and Iran-Contra. … Such genuine conspiracies are frequent enough that we tend to believe a great many more theories that are not real. … Constructive conspiracism derives from a game-theoretic model for why making a Type I error in assuming something is real when it is not (a false positive) is better than making a Type II error in assuming something is not real when it is (a false negative). … We don't always assume the worst, but if enough information points in the direction of conspiracy, or trusted sources of information assert that a conspiracy is afoot, we're more likely to believe it, just in case―better to be safe than sorry.[4]

I'm not quite sure what Shermer is trying to do with this notion of "constructive conspiracism": Is he just explaining the otherwise hard-to-understand fact that people so often believe in CTs? In other words, we can suppose that human beings evolved in an environment in which it paid to be suspicious of others, and this explains the obvious attractions that CTs have for us. However, the world we live in has changed so much that such suspiciousness is now maladaptive and dangerous. Or is Shermer arguing that it is still adaptive to be a little bit paranoid? His language in the above quotes makes it sound like this is what he is saying, but I'm reluctant to think so for the following reasons.
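The "better safe than sorry" argument is commonly formalized as an expected-cost comparison between the two kinds of error. The Python sketch below is a generic decision-theory illustration, not Shermer's own game-theoretic model; the helper names and cost figures are hypothetical. Believing has the lower expected cost only when the probability that the conspiracy is real exceeds cost_fp / (cost_fp + cost_fn). Making false negatives much costlier lowers that threshold, but, as the remark above that being paranoid can also make you dead suggests, raising the cost of false positives pushes it back up.

```python
from fractions import Fraction


def belief_threshold(cost_false_positive, cost_false_negative):
    """Probability above which believing beats disbelieving on expected cost.

    Believing costs (1 - p) * cost_false_positive on average;
    disbelieving costs p * cost_false_negative. They break even at this p.
    """
    return Fraction(cost_false_positive, cost_false_positive + cost_false_negative)


def should_believe(p_real, cost_false_positive, cost_false_negative):
    return p_real > belief_threshold(cost_false_positive, cost_false_negative)


# Hypothetical costs: missing a real conspiracy is 9 times worse than paranoia.
print(belief_threshold(1, 9))                 # 1/10
print(should_believe(Fraction(1, 20), 1, 9))  # False: still too improbable
# If paranoia is just as costly, the threshold returns to even odds.
print(belief_threshold(1, 1))                 # 1/2
```

Even with lopsided costs, a theory whose probability is low enough still falls below the threshold, so the asymmetry alone does not license believing CTs in general.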

I'm skeptical that there are any true CTs, let alone enough of them to justify erring on the side of belief. Shermer obviously must think that it's at least possible for a CT to be true since he writes of true and false CTs[5], but does he really think that enough are true to make it rational to presume that they are true in general?

It's not just my impression that, at the very least, most CTs are false; it's easily provable. Most CTs are inconsistent with other CTs; for instance, Shermer mentions the CT that Princess Diana was assassinated, as well as another CT that she faked her own death6. At most, one of these CTs could be true; more likely, both are false.

Faced with inconsistent CTs about the same event, how are we to react? We cannot rationally believe both, but we can rationally disbelieve both. If we're supposed to be "constructively" conspiracist, which should we believe? Flip a coin, perhaps? Shermer mentions that surveys have shown that those who believe one of these CTs are actually more, and not less, likely to say they believe the other6. If this is what "constructive conspiracism" leads to, then we must reject it.

For another example, there is not just a pair of inconsistent CTs about the assassination of JFK, but scores if not hundreds of them. Specifically, some claim that Oswald was a "patsy" or "fall guy" who was framed for the murder, whereas others say that he did indeed shoot at JFK, but that another shooter fired from the "grassy knoll". Also, such CTs differ on who the alleged conspirators were, with some fingering the CIA, others the Mafia, still others Cuba, and so on, ad nauseam. Like the Princess Di CTs, these CTs are mutually inconsistent. From a purely probabilistic point of view, the way to bet is against any given JFK CT, since the odds are against it.
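The betting point can be stated precisely with one elementary fact of probability, offered here as a sketch of the reasoning rather than as a model taken from Shermer's book. Suppose H_1 through H_n are mutually inconsistent theories about the same event (the labels are hypothetical, standing for, say, the rival JFK CTs). Since at most one of them can be true, their probabilities cannot add up to more than one. Two consequences follow: at most one of them can be more likely true than false, and their average probability is no more than 1/n.

```latex
\sum_{i=1}^{n} P(H_i) \le 1
\quad\Longrightarrow\quad
\#\{\, i : P(H_i) > \tfrac{1}{2} \,\} \le 1
\quad\text{and}\quad
\frac{1}{n}\sum_{i=1}^{n} P(H_i) \le \frac{1}{n}
```

With scores of rival theories, n is large, so the typical theory's chance of being the true one is small.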

Of course, believing or disbelieving something based simply on probability is a rather weak form of reasoning. The real question is whether to put any effort at all into looking into a given CT. We all need to make at least some preliminary evaluation of the plausibility of a claim to justify investigating it. Is it worth spending any time and effort to investigate the "sovereign citizens" CT, which, as I mentioned above, I know nothing about? Probabilistic considerations lead me to believe that it isn't. There are thousands of CTs, and you could spend the rest of your life just studying the hundreds of books written about the JFK assassination alone. Would it be worth doing? Not likely.

Another issue is Shermer's claim that for our "Paleolithic ancestors" it was better to err on the side of believing CTs rather than disbelieving them. I don't see what the evidence is for this, but perhaps the part of the book that I haven't read gives it. Given that humans have always been social animals, such suspicions are likely to have been disruptive of communities, especially when those suspicions were unfounded. So, false positives may have been just as bad as false negatives, even for our ancestors. However, even if it was adaptive for our ancestors, I'm pretty sure that it no longer is.

For just one example, Shermer mentions that the QAnon CT played a role in the storming of the Capitol building by protesters on the sixth of January last year7. One of those who did so was shot dead by a Capitol policeman as she was trying to climb through a broken glass door8, the only person who died directly due to the incident9. Irrational beliefs can easily lead to irrational actions.

For these reasons, I'm skeptical about the value of "constructive conspiracism", even as an explanation of the attraction that CTs hold for many people. However, I look forward to reading the remainder of Shermer's book, and perhaps he'll convince me otherwise.

Disclaimer: I haven't finished reading this book yet, so I can't review or recommend it, but its topic interests me and may also interest my readers. Also, the above criticisms are based only on reading samples of the book made available for free by the publisher. If I have inadvertently misrepresented any of Shermer's views, I apologize to him and to my readers.

Notes:

- Pp. 168-169. All page citations are to the hardback version of the new book.

- See the Reader Response to: Check it Out, 12/6/2013.

- P. xi.

- P. 14. Paragraphing suppressed.

- For instance, the title of Chapter 6 is "The Conspiracy Detection Kit: How to Tell If a Conspiracy Theory is True or False".

- P. 68.

- P. 2.

- Ellen Barry, Nicholas Bogel-Burroughs & Dave Philipps, "Woman Killed in Capitol Embraced Trump and QAnon", The New York Times, 1/7/2021.

- Robert Farley, "How Many Died as a Result of Capitol Riot?", Fact Check, 3/21/2022.

November 3rd, 2022 (Permalink)

Casual or Causal?

The same book that supplied last month's example of easily confused words1 also contained the following sentence: "Just plain data analysis will never offer a definitive proof of casual claims, but nor will it pretend to."2 What is a casual claim? A claim made casually, in passing, without emphasis? The context in which the word occurs shows that "casual" is not what was intended. Here is the sentence preceding the example sentence: "It stresses that avoiding logical fallacies, rather than avoiding violations of statistical assumptions, is the key to not drawing false causal conclusions from the analysis of one's data."2 Clearly, the word intended in the example was "causal" and not "casual".

While the spellings of these words differ only in the order of two adjacent letters, they could hardly differ more in meaning. "Causal", of course, means "having to do with causation"3, so a "causal claim" is a claim that some event caused some other event. "Casual", in contrast, means "informal" or "relaxed"4, as in casual Friday at work.

The "casual" for "causal" misspelling is presumably a causal result of rapid typing followed by quick scanning of text with the naked eye, which is not likely to catch the substitution since the two words look so much alike to the casual glance. As is true for many of the mix-ups we've looked at over the last several months, the mistake usually goes only one way: "causal" is what is meant, but "casual" is what is written. I've seen it several times over the years prior to coming across it recently and being reminded of it.

I checked the example sentence in an old copy of Microsoft Word and some online grammar checking programs. Impressively, a couple of the checkers caught the "casual" mistake, even suggesting "causal" to replace it! However, Word thought the sentence was just fine. If you rely on a spelling or grammar checker, I would suggest testing whether it can detect the difference between these two words, which are very similar in spelling but very different in meaning.

Notes:

- See: Shear or Sheer?, 10/2/2022

- Gary M. Klass, Just Plain Data Analysis: Finding, Presenting, and Interpreting Social Science Data (2008), p. xx

- "Causal", Cambridge Dictionary, accessed: 11/3/2022

- "Casual", Cambridge Dictionary, accessed: 11/3/2022